Here’s the situation: I have accounts on multiple computers. Who doesn’t have this problem these days? (Among the richest 1% of the world who own a computer, that is.) I have a netbook running Xubuntu, a desktop triple-booting Ubuntu, Debian unstable, and Windows Vista, and several accounts at school. I want to be able to sit down at any one of these computers and be as productive as possible.

My solution has several parts to it. For me to be productive, I want to have access to several different kinds of information, including:

- bookmarks

- email, including contacts

- documents

For each kind of information, I have a different way of accessing it, depending on the level of configuration I can perform on the particular computer I am using.

Bookmarks

I store my bookmarks on Google Bookmarks. Google Bookmarks has a web interface for accessing and managing bookmarks. Bookmarks are keyed by URL, so the only way to change a bookmark’s URL is to delete it and create a new one. Everything else is editable: I can change the title of the page, and tag the bookmark to organize it how I please.

The first software tool I used to access Google Bookmarks was GMarks. (GMarks is free software.) GMarks is a Firefox extension, so I can install it on any account where I have access to Firefox. Thankfully my school has the Firefox web browser on their lab computers, and they allow students to add their own extensions. GMarks adds a menu to the toolbar with bookmarks pulled from Google Bookmarks. The bookmark tags or labels are used to generate the menu, with the ‘>’ character used to represent subfolders. I prefer to sort my bookmarks by date, so the most recently added ones appear near the top.
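
To make the label convention concrete, here is a rough sketch in Python of how a menu could be derived from labels. This is not GMarks’s actual code, just my guess at the general idea: the `build_menu` function, the dictionary fields, and the sample bookmarks are all made up for illustration.

```python
from datetime import date


def build_menu(bookmarks, sep=">"):
    """Build a nested menu from bookmarks whose labels use `sep` for subfolders.

    Hypothetical data model: each bookmark is a dict with "title",
    "labels" (a list of strings) and "added" (a date).
    """
    root = {"folders": {}, "items": []}
    # Newest first, so recently added bookmarks appear near the top.
    for bm in sorted(bookmarks, key=lambda b: b["added"], reverse=True):
        # An unlabeled bookmark goes directly into the top-level menu.
        for label in bm["labels"] or [""]:
            node = root
            # "Linux > Debian" becomes folder "Linux" with subfolder "Debian".
            for part in filter(None, (p.strip() for p in label.split(sep))):
                node = node["folders"].setdefault(
                    part, {"folders": {}, "items": []}
                )
            node["items"].append(bm["title"])
    return root


# Made-up sample data, purely for illustration.
bookmarks = [
    {"title": "Debian wiki", "labels": ["Linux > Debian"], "added": date(2010, 1, 5)},
    {"title": "Xubuntu home", "labels": ["Linux"], "added": date(2010, 3, 1)},
    {"title": "Ubuntu forums", "labels": ["Linux", "Help"], "added": date(2010, 2, 10)},
]
menu = build_menu(bookmarks)
```

A bookmark with two labels shows up in two places in the menu, which is the main advantage of labels over plain folders.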

More recently I have been using Google Chrome for my web browsing. The software tool I use in this browser is Yet Another Google Bookmarks Extension (YAGBE). As this tool is not free software, I am in the market for a replacement that behaves similarly. YAGBE adds a star to the toolbar next to the URL bar. (This is to the right of the star that is already in the URL bar, which is for Chrome Bookmarks.) This is handy, and a little more compact than the default GMarks behavior. The star turns yellow when you are on a page which you have already bookmarked. Clicking the star reveals a menu with your bookmarks.

Email

The obvious solution to email is to use a provider with IMAP access, so that every client sees the same mailbox on the server. I prefer a native client to a web client where possible. I use Thunderbird on the machines I administer, and on the school lab computers, I have configured Outlook to interface with my personal email as well as my school email.

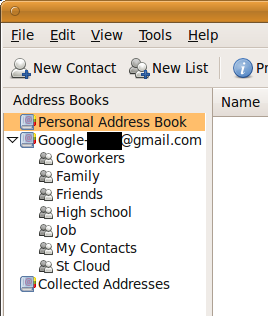

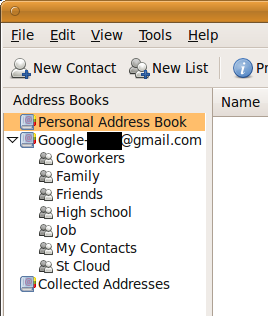

The more interesting part is contacts. At some point I got fed up with all my Thunderbird instances pulling their own contacts into the “Collected Addresses” list. I was also fed up with searching my email inbox to confirm a particular email address for one of my friends. I decided to use Google Contacts (part of Gmail) to store all the email addresses for my contacts. Similar to tags, Google allows you to add a contact to multiple groups.

There is a Thunderbird extension called (you guessed it) Google Contacts. This extension is free software. If you set up your Gmail account in Thunderbird for email, the extension will automatically use your username and password to pull in your contacts and add them as another address book. All of the groups from Gmail are created as Thunderbird Mailing Lists, so you can use them to find a contact if you desire. Otherwise, it behaves like the other address books, so you can use autocomplete.
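
Here is a small Python sketch of the group-to-mailing-list idea. It is not taken from the extension; the function names, the contact fields, and the example addresses are all assumptions of mine, meant only to show how a contact in several groups ends up on several lists.

```python
from collections import defaultdict


def groups_to_mailing_lists(contacts):
    """Turn each contact group into a mailing list of email addresses."""
    lists = defaultdict(list)
    for contact in contacts:
        # A contact may belong to several groups, or to none at all.
        for group in contact["groups"]:
            lists[group].append(contact["email"])
    return {group: sorted(members) for group, members in sorted(lists.items())}


def autocomplete(contacts, prefix):
    """Return addresses whose name or email starts with `prefix`."""
    prefix = prefix.lower()
    return sorted(
        c["email"]
        for c in contacts
        if c["name"].lower().startswith(prefix)
        or c["email"].lower().startswith(prefix)
    )


# Made-up contacts, purely for illustration.
contacts = [
    {"name": "Alice", "email": "alice@example.com", "groups": ["Friends", "School"]},
    {"name": "Bob", "email": "bob@example.org", "groups": ["School"]},
    {"name": "Carol", "email": "carol@example.net", "groups": []},
]
mailing_lists = groups_to_mailing_lists(contacts)
matches = autocomplete(contacts, "al")
```

In this sketch a contact with no groups stays out of every mailing list but is still found by autocomplete, which is how I would expect an ordinary address book entry to behave.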

Documents

For my documents, I simply use Dropbox. (This is not free software.) On computers where I can install it, I do. When I cannot, I use their web interface to get the files I need.

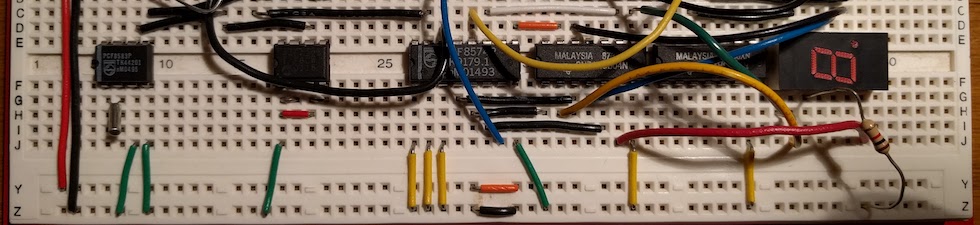

In some situations, I’ll make an exception, and put a document on Google Documents so other people can edit it. I have also used Zoho Notebook to create a paged log of my computer hacking adventures. This allows me to edit the same notebook from whichever computer is running, which is usually not the same as the one that is being experimented on!